31.05.2017

Calculating the Sounds of Music

How did machines come to make music? A short history of computer music, in the words of the curator of the “Good Vibrations” exhibition.

At a concert they attended together in the late 1950s, electrical engineer Max Mathews and his boss John Pierce had a groundbreaking idea: why not write a computer program that could generate musical sounds? They had heard works by Arnold Schönberg and Artur Schnabel that evening and, while impressed by the former, they were rather disappointed by the latter. Pierce is said to have told Mathews that a computer could do a better job and instructed him to write a program that could do just that. At the time, they were both working at Bell Laboratories in Murray Hill, New Jersey.

About a year later, Mathews presented a musical composition. It lasted a mere seventeen seconds, but it had been generated by the program that he had written: Music I. A huge IBM 704 mainframe computer was needed to perform the piece. That event, in 1957, marked the dawn of the age of computer music. Pierce's vision of a convincing musical performance wouldn’t be realized for a long time to come, as the program was initially limited to experimental applications and the sounds that it generated were very simple. What’s more, the computation times were long – several times longer than the sound sequence itself – and the program was run using low-level instructions, so it remained well out of reach for any musician not trained in programming.

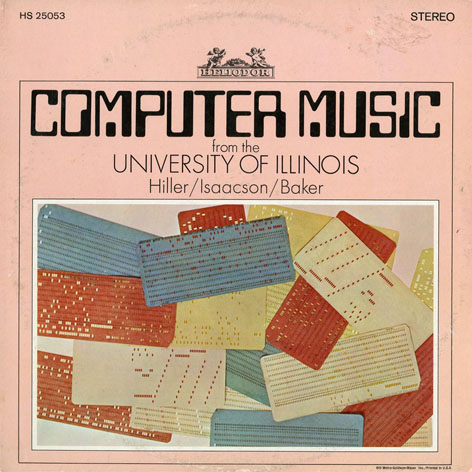

The next several decades saw the development of a wide range of systems for programming music, due in no small measure to research work done at institutions such as Princeton, Stanford, MIT (Massachusetts Institute of Technology), the University of Illinois, and the Institute of Research and Coordination in Acoustics/Music (IRCAM) in Paris. As the hardware achieved faster processing times and became easier to operate, these systems gradually became more popular among musicians.

Computers learned to play music – and compose it

From the very beginning, a distinction was made between two conceptually different types of use: that of composing with the aid of a computer and that of generating sounds with the aid of a computer. In the early stages of computer music, while Bell Labs was experimenting with the generation of sound using Music I, others were exploring the use of computers as a purely compositional tool. In 1957, the same year that Music I was presented, the ILLIAC I computer at the University of Illinois, programmed by Lejaren Hiller and Leonard Isaacson, produced the “Illiac Suite” (later renamed “String Quartet No. 4”). This was the first score to be composed by a computer. For its acoustic rendition, however, the decision was made to use a traditional string quartet – a practice adopted somewhat later by Iannis Xenakis for the performance of his own computer-generated compositions.

Computer processing speeds continued to increase until, around the turn of the millennium, it became possible to synthesize sound in real time. This advancement, alongside the practice of algorithmic composition, increasingly blurred the boundaries between the two types of use to the extent that, today, it is sometimes difficult to distinguish between the process of composition and that of real-time improvisation (using programs that are themselves essentially compositions).

In the late 1960s, attention turned to interface issues. One question was how to improve computer control methods by incorporating additional sensory channels – for example, cathode ray tube monitors, a focus of research at MIT. The converse question was how computers could be used to operate external sound generators – for example, the analog synthesizers that Max Mathews integrated into his GROOVE system from 1970.

On the technical side, these questions were essentially answered by 1983 at the latest, with the introduction of the MIDI standard – a communications protocol for the transmission of musical data and control signals. After that, it was relatively easy to use computers as controllers for electronic musical instruments and, conversely, as sound generators for external controllers, which were being developed in great numbers.
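To give a sense of what "musical data and control signals" look like on the wire, here is a minimal sketch in Python of the two most basic MIDI channel messages. The byte layout (a status byte followed by two data bytes) follows the MIDI 1.0 convention. The helper names `note_on` and `note_off` are made up for this illustration and do not belong to any particular library.

```python
def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a 3-byte MIDI Note On message (illustrative helper)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be in 0..15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be in 0..127")
    # Status byte: upper nibble 0x9 means Note On, lower nibble is the channel.
    return bytes([0x90 | channel, note, velocity])


def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    """Build a 3-byte MIDI Note Off message (illustrative helper)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be in 0..15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be in 0..127")
    # Status byte: upper nibble 0x8 means Note Off.
    return bytes([0x80 | channel, note, velocity])


# Middle C (note number 60) on the first channel, moderately loud.
message = note_on(channel=0, note=60, velocity=100)
print(" ".join(f"{byte:02x}" for byte in message))  # 90 3c 64
```

A controller sends such messages when a key is pressed and released. The receiving instrument, whether hardware or software, decides what sound to make in response.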

Computers became standard equipment in recording studios

At the same time, the computer – mostly in the form of personal computers – was also gaining a foothold in commercial music studios, where its efficient emulation of traditional analog media infrastructure soon made it indispensable. Some of the most popular emulation programs – called digital audio workstations (DAWs) – were Cubase, Logic, and Pro Tools. Soon afterwards, the German company Steinberg established the VST (Virtual Studio Technology) standard, and the integration of software instruments in production processes became a regular feature in DAW systems.

The use of computers to generate sound is now commonplace, and has been for quite some time. Programs for generating, editing, recording, and playing sounds have long been part of the virtual toolbox used by every producer – and many musicians. Thanks to computer technology, it is now increasingly common for a single individual to master both these occupations, which were once completely independent.

The degree to which computers are used in the creative process varies greatly. They are probably employed most commonly as compact and versatile studio tools, for the most part in recording and production processes. But it is not unusual for them to be used as musical instruments, or at least as part of instrumental arrangements in which they are tasked with the instruments’ sound synthesis.

In live coding, for example (a form of live performance art that has been around for about fifteen years), sounds are generated and controlled in real time by writing source code in specialized languages such as Max/MSP, SuperCollider, ChucK or Sonic Pi. The same programming environments are also used as sound engines in experimental digital interface development – that is, as sound-generating components that make it easy to explore a wide variety of sounds. In the context of popular music, standardized configurations of hardware and software, such as Ableton’s “Live” (in conjunction with the dedicated hardware controller “Push”) or Native Instruments’ “Maschine”, are performance-ready, versatile instrumental setups that are marketed by their makers as contemporary types of musical instrument.

Lastly, following in the tradition of Hiller and Isaacson, people are experimenting with many different processes of algorithmic composition. They want to see how computers calculate compositions by themselves on the basis of different sets of rules and, of course, they want to hear the results in acoustic form. Unlike in the 1950s, however, these compositions are usually played or printed by the computer itself and output either as a sound file or as a score.
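As a toy illustration of composing "on the basis of different sets of rules", the following Python sketch proposes random notes and keeps only those that pass a few simple checks. It is not a reconstruction of any historical program. The scale, the three rules, and the names `C_MAJOR` and `compose` are all assumptions chosen for this example.

```python
import random

# MIDI note numbers for one octave of C major, from C4 (60) to C5 (72).
C_MAJOR = [60, 62, 64, 65, 67, 69, 71, 72]


def compose(length: int = 8, max_leap: int = 4, seed=None) -> list:
    """Generate a melody by proposing random notes and testing them against rules."""
    rng = random.Random(seed)
    melody = [C_MAJOR[0]]  # Rule 1: begin on the tonic.
    while len(melody) < length:
        candidate = rng.choice(C_MAJOR)
        previous = melody[-1]
        # Rule 2: never repeat the previous note.
        # Rule 3: never leap by more than max_leap semitones.
        if candidate != previous and abs(candidate - previous) <= max_leap:
            melody.append(candidate)
        # Otherwise the candidate is discarded and a new one is drawn.
    return melody


print(compose(length=12, seed=2017))
```

Changing the rule set changes the character of the result, which is the kind of experiment the paragraph above describes. The resulting list of note numbers could then be rendered as sound or written out as a score.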

What taste in music do machines have?

The release of several computer-generated pop songs made headlines last year. The songs were the product of a Sony AI (artificial intelligence) program called Flow Machines, which works with a music database containing a huge number of scores from different genres. The self-learning program analyzes and identifies the characteristic parameters of a particular style of music, say that of the Beatles, and synthesizes the results in a song – in the case of the Beatles-inspired release, that song was “Daddy’s Car”. The experiment was not entirely free of human influence, however: French songwriter Benoit Carré added the finishing touches in the form of lyrics, arrangement, and production. He had the following to say about the shared creative process: “The machine does not pass any judgment on what it produces. This forces me to question my own decisions. Why this melody and not the previous one? With every step I make, I see my shadow taking shape – mine or Flow Machines’, I don’t know which.” In light of such intensely cooperative compositional practice, Tom Jenkinson’s vision of music making as a collaborative process in which machines and their users are equal partners – as laid out in his 2004 manifesto “Collaborating with Machines” – would appear to have come to fruition.